Data Hub

Remove organizational silos

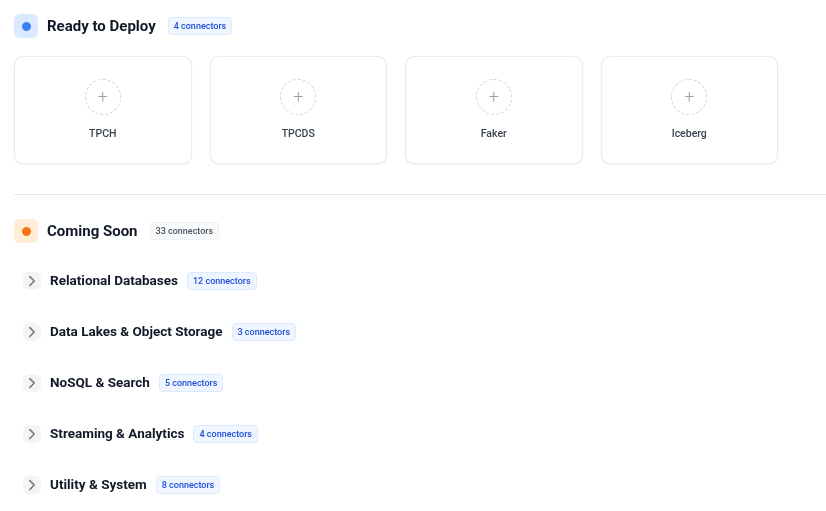

Universal connectors for your existing data sources

Overview

Turn your scattered data sources into a unified analytics powerhouse. Built on Trino's distributed SQL engine and Apache Iceberg's table format, Data Hub lets you query across PostgreSQL, MongoDB, S3, and other sources through 30+ connectors as if they were one database. No more ETL headaches, just pure analytical power.
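To give a feel for what "as if they were one database" can mean in practice, here is a minimal sketch of a federated Trino query that joins a PostgreSQL table with a MongoDB collection. The catalog names (`postgresql`, `mongodb`), the schemas, and the table and column names are all hypothetical placeholders, not Data Hub's actual configuration.

```python
def build_cross_source_join(pg_catalog="postgresql", mongo_catalog="mongodb"):
    """Return a Trino SQL statement joining two different source systems.

    Trino addresses every table as catalog.schema.table, so tables from
    different systems can appear in the same FROM/JOIN clause.
    All catalog, schema, table, and column names here are assumptions.
    """
    return f"""
SELECT c.customer_id, c.name, count(*) AS interactions
FROM {pg_catalog}.public.customers AS c
JOIN {mongo_catalog}.shop.product_interactions AS i
  ON i.customer_id = c.customer_id
GROUP BY c.customer_id, c.name
ORDER BY interactions DESC
""".strip()


if __name__ == "__main__":
    # Print the statement; it could then be submitted through any Trino client.
    print(build_cross_source_join())
```

The statement could be run with any Trino client, for example through a cursor obtained from the `trino` Python package's `trino.dbapi.connect(...)`, assuming a reachable coordinator and catalogs configured under these names.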

Technical Specifications

Use Cases

Cross-source analytics

Join PostgreSQL with MongoDB in a single query

Complex KPI computing

Advanced analytics across distributed datasets

Unified data lakehouse

All your data sources in one queryable platform

Hyperfluid in Action

The impossible join

Sarah needs to join customer data (PostgreSQL) with product interactions (MongoDB)

Steps

Result

Complex analytics that used to take weeks, now in real time

The KPI revolution

Finance team calculates monthly revenue across 8 different systems

Steps

Result

Monthly reporting cut from 2 weeks to 30 minutes

The data time machine

Thanks to Iceberg, travel back in time across all your data (Coming 2025)
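Since this feature is not yet released, the following is only a sketch of what such a query might look like, based on the time travel syntax that Trino's Iceberg connector supports (`FOR TIMESTAMP AS OF`). The table name `iceberg.sales.orders` and the helper function are hypothetical, and how Data Hub will expose this is an assumption.

```python
from datetime import datetime, timezone


def build_time_travel_query(table, as_of):
    """Return a Trino SQL statement reading an Iceberg table as of a past moment.

    `table` is a fully qualified catalog.schema.table name (hypothetical here).
    `as_of` must be timezone-aware; it is normalized to UTC for the literal.
    """
    if as_of.tzinfo is None:
        raise ValueError("as_of must be timezone-aware")
    ts = as_of.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
    # Trino reads the snapshot that was current at the given timestamp.
    return f"SELECT * FROM {table} FOR TIMESTAMP AS OF TIMESTAMP '{ts} UTC'"


if __name__ == "__main__":
    moment = datetime(2025, 1, 1, tzinfo=timezone.utc)
    print(build_time_travel_query("iceberg.sales.orders", moment))
```

Because the query reads a past snapshot rather than modifying anything, it is a natural fit for comparing an experiment's output against the state of the data before the change.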

Steps

Result

Safe data experimentation without breaking production

Key Benefits

Interested in Data Hub?

Discover how this component can transform your data architecture.